Are today’s data center cooling systems ready for the AI era?

To answer that, picture a marathon runner wearing walking shoes: the shoes are simply not built for the pace, pressure, or performance the race demands. In the same way, cooling systems that once met traditional needs are quickly proving unfit for the intensity, heat density, and precision cooling demands of AI-driven data centers.

The challenge is twofold: compute capacity keeps growing, while traditional cooling methods are reaching their limits. AI workloads create unprecedented thermal management demands that expose limitations in conventional cooling designs. From scalability bottlenecks to inefficiencies in heat removal, these gaps will only widen as AI usage accelerates.

In this article, we identify the critical limitations that stand between current cooling capabilities and the needs of tomorrow’s AI-powered data centers.

What Cooling Gaps Are Holding Back AI Data Centers?

Thermal Density Spikes Are Overwhelming Existing Cooling Capacity

AI workloads are driving thermal densities far beyond the limits of legacy (and even many modern) cooling systems. Rack densities are climbing, and chip-level heat generation is hitting record highs. At scale, this compounds into cooling challenges that strain both site-level and regional infrastructure:

- Rack densities reaching 50-100 kW, fueled by GPUs like NVIDIA’s Blackwell, drawing up to 1,200 watts each.

- Hyperscale computing power is consolidating at an unprecedented pace, accounting for 41% of global capacity today and expected to exceed 60% by 2029.

- The combined effect of hotter racks and higher aggregate demand will cause infrastructure to struggle to keep up.
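To put these rack densities in perspective, the sketch below applies the basic sensible-heat relation (heat removed = mass flow × specific heat × temperature rise) to estimate how much air or water must move through a rack. The fluid properties are approximate textbook values, and the temperature rises (12 K for air, 10 K for water) are illustrative assumptions, not figures from any specific facility.

```python
# Rough sensible-heat estimate: Q = m_dot * c_p * delta_T
# Fluid properties are approximate room-condition values.
AIR_DENSITY = 1.2       # kg/m^3
AIR_CP = 1005.0         # J/(kg*K)
WATER_DENSITY = 997.0   # kg/m^3
WATER_CP = 4186.0       # J/(kg*K)
M3S_TO_CFM = 2118.88    # 1 m^3/s expressed in cubic feet per minute


def volumetric_flow_m3_per_s(heat_w, density, cp, delta_t_k):
    """Volume flow needed to carry heat_w watts at a delta_t_k temperature rise."""
    mass_flow = heat_w / (cp * delta_t_k)  # kg/s
    return mass_flow / density


def rack_flow_summary(rack_kw, air_delta_t_k=12.0, water_delta_t_k=10.0):
    """Compare air vs. water flow for one rack (delta-T values are assumptions)."""
    heat_w = rack_kw * 1000.0
    air = volumetric_flow_m3_per_s(heat_w, AIR_DENSITY, AIR_CP, air_delta_t_k)
    water = volumetric_flow_m3_per_s(heat_w, WATER_DENSITY, WATER_CP, water_delta_t_k)
    return {
        "air_m3_per_s": air,
        "air_cfm": air * M3S_TO_CFM,
        "water_l_per_min": water * 1000.0 * 60.0,
    }


for rack_kw in (10, 50, 100):
    s = rack_flow_summary(rack_kw)
    print(
        f"{rack_kw:>3} kW rack: {s['air_m3_per_s']:.2f} m^3/s air "
        f"({s['air_cfm']:.0f} CFM) or {s['water_l_per_min']:.0f} L/min water"
    )
```

Under these assumptions, a 100 kW rack needs on the order of 7 m³/s of air but only around 140 L/min of water, which illustrates why air-only designs run out of headroom as densities climb.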

Water and Energy Constraints Limit Cooling Options

AI workloads magnify the pressure on the two most constrained resources in data center cooling: water and energy. Optimizing for one often comes at the expense of the other, a trade-off commonly framed as power usage effectiveness (PUE) versus water usage effectiveness (WUE). This leaves operators with sustainability, cost, and compliance challenges such as:

- Liquid cooling could consume 1.7 trillion gallons of water annually by 2027, with individual hyperscale sites requiring more than 50 million gallons per year, much of which would be permanently lost to evaporation.

- Air cooling can consume up to 40% of a facility’s total electricity, reducing the power available for other community and industrial needs.

- National and regional strain on infrastructure is already evident, as seen in Ireland, where data centers consumed around 20% of electricity in 2024, prompting a halt on new builds in Dublin.

Unreliable Power Infrastructure Disrupts Cooling Performance

AI cooling’s heavy power demands collide with grid infrastructure that was never designed for continuous, high-throughput operation. This mismatch can compromise cooling performance and system reliability. For example:

- Existing grids are built for predictable, patterned demand, while AI runs at full tilt 24/7.

- According to a 2014 Department of Energy study, the average age of large power transformers, which handle 90% of U.S. electricity flow, is more than 40 years.

- AI training load fluctuations force cooling systems to operate outside optimal design parameters, lowering efficiency and accelerating wear.

- Voltage or frequency variations in the grid degrade chiller and pump performance, risking facility-wide cooling instability.
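One way to see why grid frequency matters to pumps and fans is the set of idealized affinity laws: flow scales with shaft speed, head with speed squared, and power with speed cubed. The sketch below assumes a fixed-speed motor connected directly to the grid (no variable-frequency drive), so that shaft speed tracks grid frequency. Both that assumption and the 60 Hz nominal value are illustrative, and real equipment will deviate from the ideal curves.

```python
def affinity_scaling(freq_hz, nominal_hz=60.0):
    """Idealized affinity-law ratios for a direct-on-line pump or fan.

    Assumes shaft speed is proportional to grid frequency (no VFD).
    Returns multipliers relative to nominal operation.
    """
    if freq_hz <= 0 or nominal_hz <= 0:
        raise ValueError("frequencies must be positive")
    ratio = freq_hz / nominal_hz
    return {
        "flow": ratio,        # flow  ~ speed
        "head": ratio ** 2,   # head  ~ speed^2
        "power": ratio ** 3,  # power ~ speed^3
    }


# Effect of a sustained 1% and 2% under-frequency excursion on a 60 Hz grid.
for freq in (60.0, 59.4, 58.8):
    r = affinity_scaling(freq)
    print(
        f"{freq:.1f} Hz: flow x{r['flow']:.3f}, "
        f"head x{r['head']:.3f}, power x{r['power']:.3f}"
    )
```

Even small excursions reduce delivered coolant flow and available head, which matters most for loops already operating near their design limits.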

Compressed Deployment Timelines Compromise Cooling Effectiveness

AI adoption has condensed data center construction schedules to the point where cooling systems are often deployed under extreme time pressure. Their design, procurement, and installation are increasingly done in parallel instead of sequentially, raising operational risks and long-term efficiency concerns:

- Market expectations now require the delivery of hundreds of megawatts of capacity within 12-18 months, compared to the traditional timeline of more than two years from conception to commissioning.

- According to STL Partners’ research, every month of delay in completing a data center costs the developer USD 14.2 million in lost revenue, cost overruns, and contractual penalties.

- High-speed deployments, such as delivering more than 40 MW in just 16 months, often carry higher operational risk and lower initial cooling efficiency.

How Should Data Centers Change for the Future of AI?

Given these widening gaps, it’s unrealistic to expect traditional cooling systems to enter the AI era with confidence. Meeting tomorrow’s demands will require rethinking thermal management from the ground up. Specifically, an AI-ready data center cooling system is one that closes the gaps above through the following capabilities:

- Modular Cooling Architecture: Modular cooling enables data centers to expand quickly without overbuilding, ensuring that capacity aligns with demand as it grows unpredictably. By incorporating scalable cooling capabilities, operators can adapt in real time to changing thermal loads while optimizing both energy efficiency and sustainability.

- Multi-Mode Cooling Readiness: AI facilities perform best when they can draw on both liquid and air cooling, allowing operators to match the right mode to the right workload and environmental conditions. Hybrid configurations not only manage thermal hotspots more effectively but also add inherent redundancy if one mode is compromised.

- Intelligent Thermal Controls: AI-powered control systems can optimize cooling in real time, adjusting equipment settings to match fluctuating workloads and environmental conditions. This approach improves energy efficiency, reduces water consumption, and enhances resilience through predictive fault detection.

- Redundancy by Design: With AI racks drawing 50-100 kW, a single cooling failure can lead to costly outages and severe performance disruptions. Redundant architectures and independent cooling loops ensure thermal stability even if one system component goes offline.

- Sustainable Cooling Practices: Sustainable cooling approaches reduce environmental impact and lower operational costs in high-density AI environments. By combining energy-efficient technologies and green innovations, facilities can balance the energy needs of AI workloads with the needs of surrounding communities.
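The modular and redundancy capabilities above can be combined in a simple sizing exercise. The sketch below computes how many identical cooling modules are needed for a given rack count under an N+1 policy (enough modules to carry the load, plus one spare), and shows how the count grows as racks are added. The 500 kW module size, the 80 kW rack density, and the growth phases are all hypothetical values chosen for illustration.

```python
import math


def modules_required(load_kw, module_kw, spare=1):
    """Modules needed to carry load_kw, plus `spare` redundant modules (N+spare)."""
    if load_kw < 0 or module_kw <= 0 or spare < 0:
        raise ValueError("load and spare must be >= 0 and module size must be > 0")
    return math.ceil(load_kw / module_kw) + spare


def plan_phase(racks, rack_kw, module_kw, spare=1):
    """Summarize cooling capacity for one build-out phase."""
    load_kw = racks * rack_kw
    count = modules_required(load_kw, module_kw, spare)
    return {
        "load_kw": load_kw,
        "modules": count,
        "installed_kw": count * module_kw,
        # True if the remaining modules still cover the load after one failure.
        "survives_one_failure": (count - 1) * module_kw >= load_kw,
    }


# Hypothetical growth path: 80 kW racks served by 500 kW cooling modules.
for racks in (10, 20, 40):
    p = plan_phase(racks, rack_kw=80, module_kw=500)
    print(
        f"{racks} racks: {p['load_kw']} kW load, {p['modules']} modules "
        f"({p['installed_kw']} kW installed), "
        f"survives one failure: {p['survives_one_failure']}"
    )
```

Adding modules phase by phase keeps installed capacity close to actual load, while the spare module preserves cooling if any single unit goes offline.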

Closing the Cooling Gaps in the AI Era

Preparing data center cooling for the AI era starts with a clear understanding of the challenges ahead and the strategic choices needed to overcome them. Closing these gaps calls for systems that align with both immediate operational needs and long-term scalability.

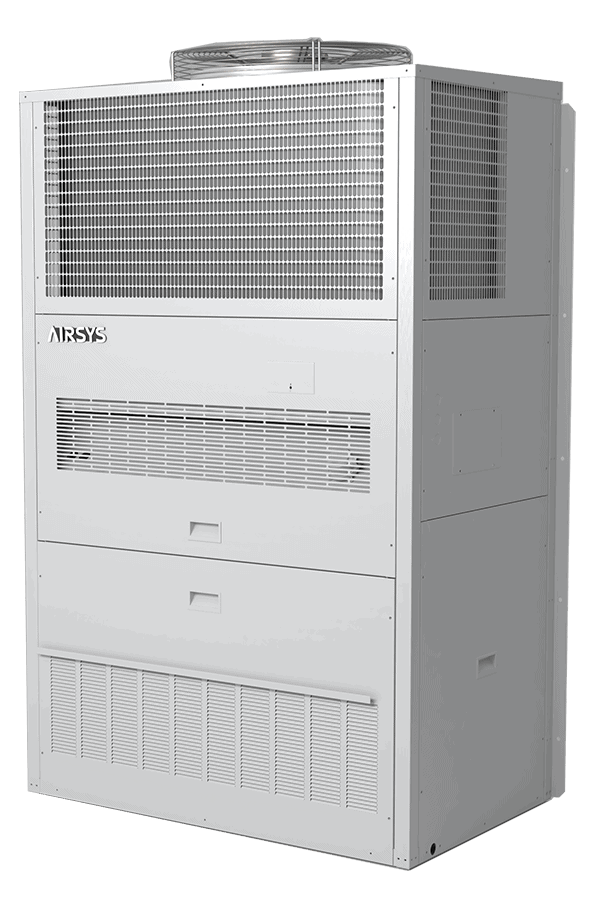

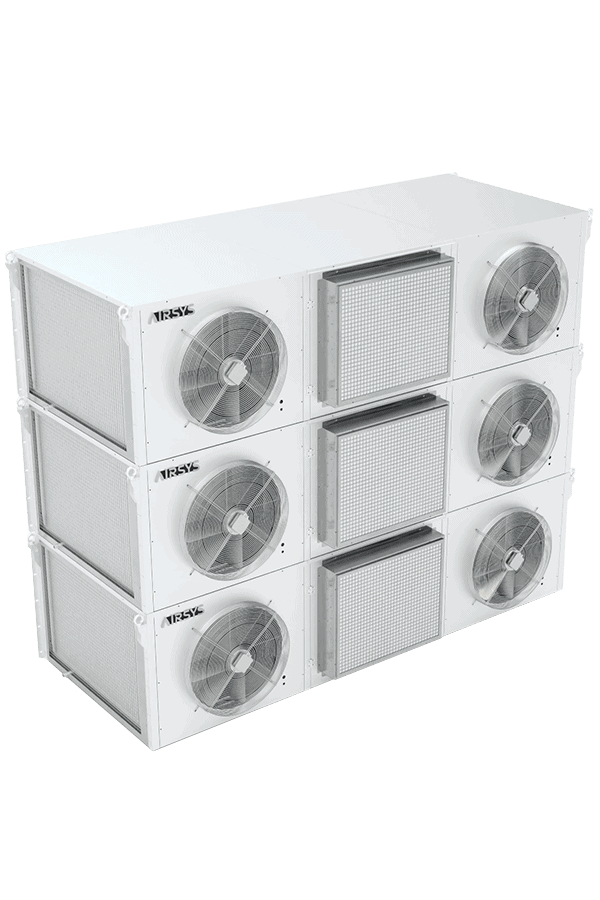

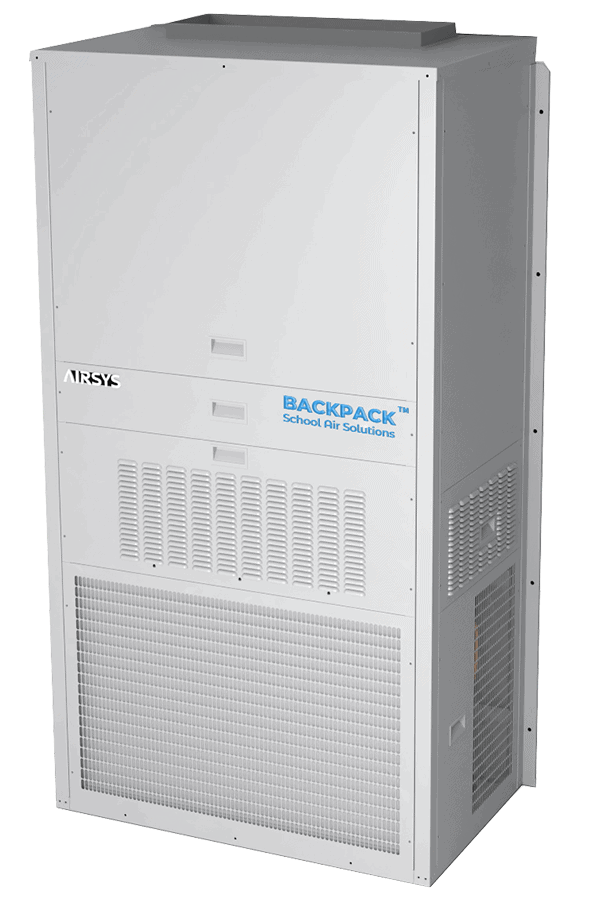

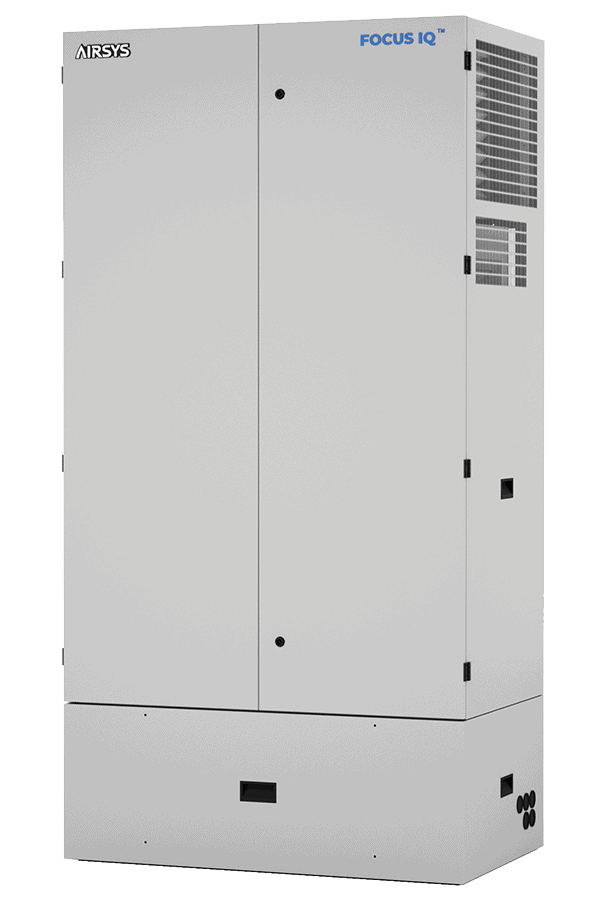

AIRSYS delivers data center cooling solutions designed for long-term performance in high-density, AI-driven environments. The PowerOne™ system allows operators to maximize computing capacity and revenue potential by significantly reducing cooling energy use, transforming cooling from a cost center into a strategic enabler. Contact us to explore how PowerOne can help position your data center for sustained AI growth.