The intersection of AI and data centers represents one of the most significant data center trends of this decade. As artificial intelligence reshapes industries and drives innovation, it’s fundamentally transforming how data centers are designed, built, and operated. The impact is so profound that AI has effectively carved out its own niche within the data center world.

This article explores the relationship between AI and data centers. Specifically, we examine how AI workloads drive the evolution of dedicated AI data centers, their unique architectural requirements, and the critical role of cooling systems in maintaining these high-performance environments.

The Rise of AI Data Centers

Almost every industry is now seeking new AI-driven capabilities to streamline operations and enhance outcomes. From consumer products and business software to healthcare needs and manufacturing processes, AI is becoming deeply embedded in modern technology. As organizations worldwide adopt AI at an unprecedented pace, the demand for data processing has surged to historic levels. By 2030, an estimated 70% of total data center demand will be driven by the need to support sophisticated AI applications.

The sheer computational intensity of AI workloads is pushing traditional data centers beyond their limits. AI workloads can require roughly 10 times the resources of traditional cloud computing, making them nearly impossible to run efficiently in on-premises or in-house data centers. These facilities simply lack the power, cooling capacity, and redundancy needed to sustain AI’s immense processing demands. This paradigm shift is driving the emergence of dedicated AI data centers, purpose-built to handle the unprecedented scale of AI computing while ensuring efficiency, reliability, and sustainability.

What is an AI Data Center?

An AI data center is a specialized facility designed to support the immense computational demands of artificial intelligence workloads. Unlike traditional data centers, which primarily handle cloud computing and general enterprise applications, AI data centers are built with high-performance infrastructure to train and deploy complex machine learning models. These facilities process vast amounts of data to train models and power AI-driven applications across various industries.

Within this segment, AI data centers are further categorized into training AI data centers, which perform intensive computations for developing and refining AI models, and inference AI data centers, which deploy trained models to generate real-time insights and predictions. With their high efficiency, substantial power capacity, and specialized infrastructure, AI data centers are essential for scaling AI innovation and integrating intelligent solutions into everyday operations.

How Is an AI Data Center Built?

The architecture of an AI data center requires a complete reimagining of traditional data center design principles to support intensive AI workloads. While traditional CPU-powered data centers operate at around 12 kW per rack, AI workloads demand over 40 kW per rack, a density projected to double within one to two years, necessitating fundamental changes in infrastructure design.
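To put these densities in perspective, the short Python sketch below works through two pieces of arithmetic: the annual growth rate implied by a doubling in one or two years, and how many racks a fixed IT power budget can feed at each density. The 12 kW and 40 kW figures come from the text; the 1 MW budget is a hypothetical value chosen only for illustration.

```python
import math

# Per-rack densities cited in the text.
CPU_RACK_KW = 12.0
AI_RACK_KW = 40.0


def implied_annual_growth(doubling_years: float) -> float:
    """Annual growth rate implied by a quantity doubling in `doubling_years`."""
    return 2 ** (1 / doubling_years) - 1


def racks_supported(it_budget_kw: float, rack_kw: float) -> int:
    """Whole racks a fixed IT power budget can feed at a given per-rack density."""
    return math.floor(it_budget_kw / rack_kw)


if __name__ == "__main__":
    for years in (1, 2):
        print(f"Doubling in {years} yr -> {implied_annual_growth(years):.0%} per year")

    budget_kw = 1_000.0  # hypothetical 1 MW IT power budget
    for label, kw in [("CPU", CPU_RACK_KW), ("AI", AI_RACK_KW), ("AI x2", 2 * AI_RACK_KW)]:
        print(f"{label:>5}: {kw:5.0f} kW/rack -> {racks_supported(budget_kw, kw)} racks")
```

A one-year doubling means 100% growth per year and a two-year doubling roughly 41%. The same 1 MW budget feeds 83 racks at 12 kW but only 25 at 40 kW and 12 at 80 kW, so the power and heat of an entire hall become concentrated in far fewer racks.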

Key architectural components of AI data centers include:

- Computing Resources: AI data centers move beyond traditional CPU-based servers to high-power accelerators designed for AI workloads. These include GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units), which provide the parallel processing necessary for deep learning and complex model training.

- Data Storage Infrastructure: High-performance, scalable storage systems support massive datasets required for AI model development and deployment, emphasizing rapid data access and processing.

- Network Architecture: AI workloads rely on parallel processing across multiple GPU and TPU clusters, necessitating low-latency, high-speed networking between them. This includes specialized cabling and high-bandwidth interconnects to minimize data transfer delays and optimize performance within and between server racks.

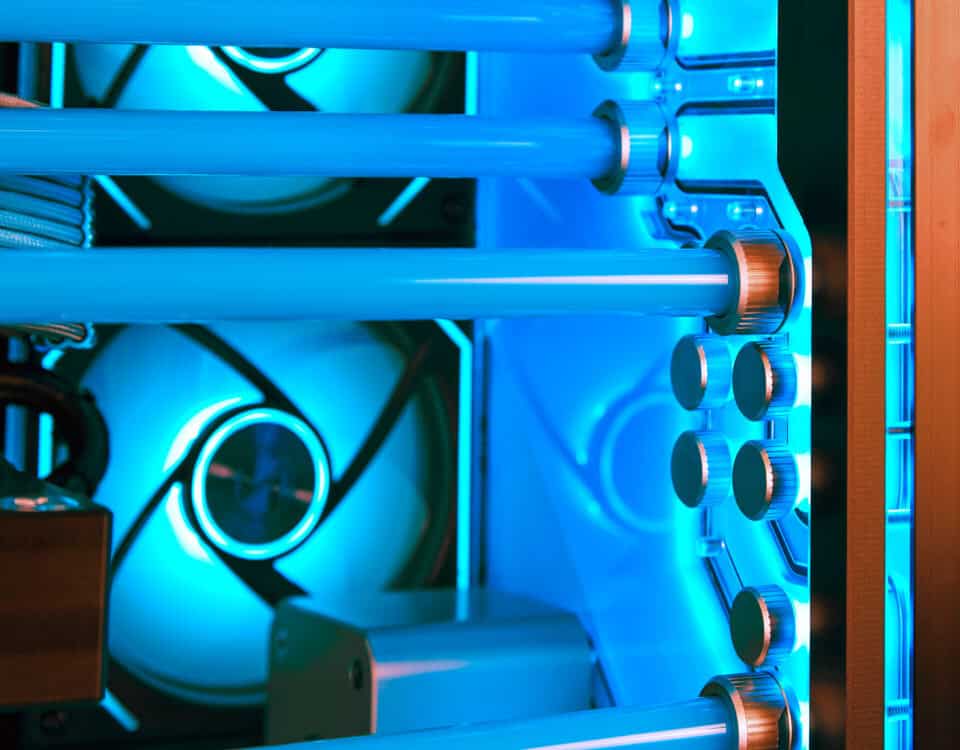

- Cooling Solutions: At hyperscale and AI data centers, power densities can exceed 40 kW per rack, making traditional air cooling increasingly inefficient. Advanced cooling technologies are essential to manage the intense heat generated by AI computing resources.
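Why does air cooling struggle at these densities? A rough answer comes from the sensible-heat balance P = ρ · V̇ · c_p · ΔT: the coolant volume flow needed grows with rack power and shrinks with the coolant's volumetric heat capacity. The Python sketch below compares air and water at the same temperature rise. The property values are approximate room-temperature figures, and the 10 K rise is an assumed example rather than a design value.

```python
# Sensible-heat balance: P = rho * V_dot * c_p * dT, solved for V_dot.
AIR_DENSITY = 1.2        # kg/m^3, air near room temperature (approximate)
AIR_CP = 1005.0          # J/(kg*K), specific heat of air (approximate)
WATER_DENSITY = 997.0    # kg/m^3, water near 25 degC (approximate)
WATER_CP = 4186.0        # J/(kg*K), specific heat of water (approximate)


def volumetric_flow_m3s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Coolant volume flow (m^3/s) needed to carry heat_w at a temperature rise of delta_t_k."""
    return heat_w / (density * cp * delta_t_k)


def compare(rack_kw: float, delta_t_k: float = 10.0):
    """Return (air flow in m^3/s, water flow in L/min) to remove rack_kw at the same rise."""
    heat_w = rack_kw * 1_000
    air_m3s = volumetric_flow_m3s(heat_w, AIR_DENSITY, AIR_CP, delta_t_k)
    water_m3s = volumetric_flow_m3s(heat_w, WATER_DENSITY, WATER_CP, delta_t_k)
    return air_m3s, water_m3s * 1_000 * 60  # m^3/s -> L/min


if __name__ == "__main__":
    for kw in (12, 40):
        air, water = compare(kw)
        print(f"{kw} kW rack: air {air:.2f} m^3/s vs water {water:.1f} L/min")
```

Under these assumptions, a 12 kW rack needs roughly 1 m³/s of air but only about 17 L/min of water, while a 40 kW rack needs roughly 3.3 m³/s of air versus about 57.5 L/min of water. Per unit volume, water carries roughly 3,500 times more heat than air for the same temperature rise, which is why liquid cooling scales better at high rack densities.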

AI Data Centers and Cooling – Tackling the Heat Challenge

AI data centers are among the most power-hungry facilities in digital infrastructure, due to three key factors:

- GPU clusters consume significant amounts of energy due to their high-speed, complex computations.

- High-density server arrangements save space but generate concentrated heat loads.

- AI processes run continuously, with models processing and learning 24/7, resulting in a constant energy drain.
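Because AI processes run around the clock, per-rack power translates directly into large annual energy totals, and essentially all of the electrical power drawn by IT equipment ends up as heat that the cooling system must remove. The sketch below does the arithmetic for the rack densities cited earlier; the `utilization` parameter is a hypothetical knob for racks that do not run at full power all year.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_mwh(rack_kw: float, utilization: float = 1.0) -> float:
    """Energy (MWh) drawn by a rack averaging rack_kw * utilization for a full year."""
    return rack_kw * utilization * HOURS_PER_YEAR / 1_000


if __name__ == "__main__":
    for kw in (12, 40):
        print(f"{kw} kW rack at full load: {annual_energy_mwh(kw):.1f} MWh/year")
```

At full load, a 40 kW rack draws about 350 MWh per year, compared with about 105 MWh for a 12 kW rack, and nearly all of that energy must be removed as heat.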

As AI adoption accelerates, a Moody’s report predicts that AI-driven data center energy consumption will increase by 43% annually, making cooling one of the most pressing challenges in AI infrastructure.
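To see what a 43% annual growth rate means over a planning horizon, the sketch below simply compounds the reported rate year over year. It is not a forecasting model; it assumes the rate stays constant, and the five-year horizon is an arbitrary illustrative choice.

```python
import math


def growth_multiple(annual_rate: float, years: int) -> float:
    """Cumulative multiple after compounding annual_rate for `years` years."""
    return (1 + annual_rate) ** years


def years_to_multiple(annual_rate: float, target: float) -> float:
    """Years of compounding at annual_rate needed to reach `target` times the start."""
    return math.log(target) / math.log(1 + annual_rate)


if __name__ == "__main__":
    rate = 0.43  # annual growth rate from the Moody's projection cited above
    print(f"Doubling time: {years_to_multiple(rate, 2):.1f} years")
    for years in (1, 3, 5):
        print(f"After {years} yr: {growth_multiple(rate, years):.2f}x")
```

If the rate held, consumption would double roughly every two years and grow almost sixfold over five years, which is the scale of thermal load cooling systems would need to absorb.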

Cooling AI data centers is also particularly complex. First, the specialized hardware used in AI applications (such as GPUs, NPUs, and FPGAs) generates significantly more heat than traditional computing components. Second, the compact layout of AI chips creates concentrated heat zones, making heat dissipation more difficult. And if that’s not enough, existing cooling methods, which were primarily designed for lower power densities, are struggling to keep up.

Given these challenges, AI data centers must rethink their thermal management strategies to ensure continuous operation and prevent performance degradation. Some key approaches include:

- Liquid Cooling Systems: Liquid cooling technology utilizes liquid’s superior heat transfer capabilities to efficiently absorb and dissipate heat from IT equipment rather than transferring it with air. While the initial investment is higher, its energy efficiency, reliability, and sustainability benefits make it an essential solution for managing the intense thermal demands of AI workloads.

- Heat Reuse Implementation: AI’s high energy use presents an opportunity to repurpose waste heat to improve sustainability. Heat reuse is gaining traction, particularly in Europe, where regulations incentivize data centers to convert excess heat into useful energy. As AI workloads generate more heat, investments in heat reuse strategies could become more financially and environmentally beneficial.

- AI-Driven Cooling Analytics: Advanced analytics and sensor systems enable real-time cooling optimization. This approach allows precise temperature control and preventive maintenance, creating a dynamic cooling infrastructure that adapts to varying AI workload demands.
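The two ideas behind cooling analytics, adjusting cooling output to real-time sensor readings and flagging unusual readings for maintenance, can be sketched in a few lines. The Python example below is a deliberately simple stand-in: it uses a plain proportional control loop and a rolling z-score check rather than any AI model, and it does not describe how any particular product, including AIRSYS systems, works. The class name, setpoint, gain, and thresholds are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class CoolingController:
    """Hypothetical controller: proportional output plus a rolling anomaly check."""

    def __init__(self, setpoint_c=27.0, gain=10.0, base_output=40.0, window=12, z_limit=3.0):
        self.setpoint_c = setpoint_c      # target rack inlet temperature, degC (assumed)
        self.gain = gain                  # output % added per degC above setpoint (assumed)
        self.base_output = base_output    # output % when exactly at setpoint (assumed)
        self.z_limit = z_limit            # z-score above which a reading is flagged (assumed)
        self.history = deque(maxlen=window)  # most recent readings only

    def output_pct(self, inlet_c: float) -> float:
        """Fan/pump output (%) proportional to the temperature error, clamped to 0-100."""
        error = inlet_c - self.setpoint_c
        return max(0.0, min(100.0, self.base_output + self.gain * error))

    def is_anomalous(self, inlet_c: float) -> bool:
        """Flag a reading far outside the recent window, then add it to the history."""
        flagged = False
        if len(self.history) >= 3:
            recent = list(self.history)
            mu, sigma = mean(recent), pstdev(recent)
            flagged = sigma > 0 and abs(inlet_c - mu) / sigma > self.z_limit
        self.history.append(inlet_c)
        return flagged


if __name__ == "__main__":
    ctl = CoolingController()
    for reading in [26.8, 27.1, 26.9, 27.0, 29.5, 35.0]:
        print(reading, ctl.output_pct(reading), ctl.is_anomalous(reading))
```

A production system would typically replace the fixed gain with tuned or learned control and feed many sensors into the anomaly model; the sketch only shows the feedback and alerting structure.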

Is Your Data Center AI-Ready?

As the relationship between AI and data centers continues to evolve, effective cooling strategies have become a critical differentiator. Data centers that are quick to embrace the above technologies will gain a competitive edge in the race to meet AI’s growing demands. These innovative solutions will not only help data centers maintain operational efficiency but also position them as leaders in the rapidly expanding AI market.

At AIRSYS, we have the expertise and technology to help forward-thinking data centers transition smoothly into the AI era. Our innovative LiquidRack™ system takes liquid cooling to new heights with its advanced features. Additionally, all of our data center cooling solutions are equipped with AI-driven monitoring and energy-efficient, sustainable systems, ensuring that your facility stays ahead of the curve. Contact us today to learn more about optimizing your cooling infrastructure for AI operations.