Investment in the data center industry shows no signs of slowing. In 2025, spending by the six U.S. hyperscalers (Microsoft, Amazon, Alphabet, Oracle, Meta Platforms, and CoreWeave) approached $400 billion, with projections reaching $500 billion in 2026 and $600 billion in 2027. Moody’s Global Data Centers 2026 Outlook further estimates that global data center investment could exceed $3 trillion over the next five years.

The plans are ambitious. But a critical question remains: will there be enough electricity to support the computing capacity these facilities are meant to deliver?

The answer is complex, but one reality is already clear: power strategy has become the foundation of AI data center design. In this new era, the operators who succeed will be those who can secure power and turn it into usable compute. The challenge, then, is how to secure power and get the most out of it in a world where electrical capacity is increasingly constrained.

What is the power deficit for data centers?

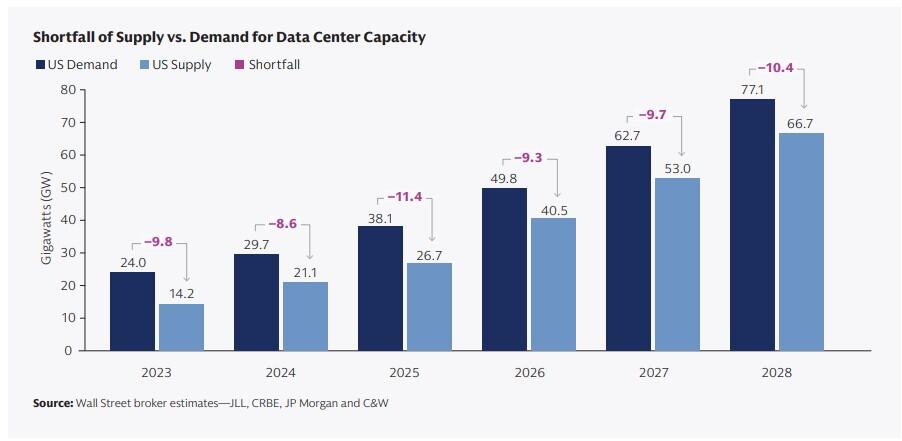

The data center power deficit is the growing gap between how much electricity data centers need and how much power grids can actually provide. That gap is set to widen as AI workloads grow, rack densities rise, and hyperscalers expand faster than utilities can respond. As a result, for many operators the primary constraint is no longer space or equipment but simply securing enough electricity to support operations.

This is not a theoretical scenario; the imbalance is already measurable. Goldman Sachs estimates that by 2028 the data center power gap will reach 10 gigawatts (GW), roughly enough to supply about 7.5 million homes continuously. As securing power becomes harder, this growing shortfall forces operators to rethink how they plan, allocate, and use power capacity.
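Because a gigawatt is a rate of power rather than an amount of energy, the homes comparison is easiest to check by converting the gap into annual energy. This minimal sketch uses only the 10 GW and 7.5 million figures from the text and assumes the gap is a constant load sustained all year; the per-home consumption it prints is derived arithmetic, not a sourced statistic:

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 h (ignores leap years)


def annual_energy_twh(power_gw: float) -> float:
    """Energy delivered in one year by a constant load of `power_gw` gigawatts."""
    return power_gw * HOURS_PER_YEAR / 1000  # GWh -> TWh


def implied_kwh_per_home(power_gw: float, homes: float) -> float:
    """Annual kWh per home if the constant load were shared evenly across `homes`."""
    kwh_per_year = power_gw * 1e6 * HOURS_PER_YEAR  # GW -> kW, times hours
    return kwh_per_year / homes


gap_gw = 10.0   # Goldman Sachs 2028 estimate quoted above
homes = 7.5e6   # homes figure quoted above

print(f"{annual_energy_twh(gap_gw):.1f} TWh per year")                      # 87.6 TWh per year
print(f"{implied_kwh_per_home(gap_gw, homes):,.0f} kWh per home per year")  # 11,680 kWh per home per year
```

The implied figure, about 11,700 kWh per home per year (an average draw of roughly 1.3 kW), is broadly in line with commonly cited U.S. household averages, so the comparison holds up as an order-of-magnitude check.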

Data centers can’t wait for more power

Much of the industry discussion around the data center power shortage focuses on adding new power sources. Solar and other renewables, advanced nuclear projects, battery storage, and natural gas turbines are frequently cited as long-term solutions.

In practice, however, these options are often impractical on the timelines of modern AI deployments. Lengthy permitting processes, grid interconnection delays, regulatory hurdles, and complex construction schedules mean new power sources rarely come online quickly or cheaply enough to keep pace with accelerating compute demand.

That’s why most data centers don’t have the luxury of waiting for “new power.” The reality is that the power available today is the power operators must learn to optimize. As the data center power shortage intensifies, getting more compute out of every available watt must become a top priority. Operators that fail to do so risk constraining growth and falling behind on performance and competitiveness.

Data center cooling: The fastest path to more compute

After IT equipment, cooling is the second-largest energy consumer in data centers and can account for as much as 40% of total facility energy use. That makes cooling one of the most effective levers for optimizing power use in high-density AI environments, where even modest efficiency gains can free up meaningful amounts of power.
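Using the standard definition of Power Usage Effectiveness (total facility power divided by IT power), the 40% figure translates into a rough efficiency floor. In this sketch, c and o are assumed shares of facility power going to cooling and to all other overhead:

```latex
\mathrm{PUE} = \frac{P_{\text{facility}}}{P_{\text{IT}}},
\qquad P_{\text{facility}} = P_{\text{IT}} + P_{\text{cool}} + P_{\text{other}}

P_{\text{cool}} = c\,P_{\text{facility}}, \quad P_{\text{other}} = o\,P_{\text{facility}}
\;\Rightarrow\; P_{\text{IT}} = (1 - c - o)\,P_{\text{facility}}
\;\Rightarrow\; \mathrm{PUE} = \frac{1}{1 - c - o}

c = 0.40,\; o \ge 0 \;\Rightarrow\; \mathrm{PUE} \ge \frac{1}{0.60} \approx 1.67
```

In other words, a facility where cooling takes 40% of the energy cannot achieve a PUE better than about 1.67, however efficient the rest of its infrastructure is.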

What does cooling optimization mean?

Every kilowatt spent on cooling is a kilowatt not available to power IT and, therefore, a kilowatt not driving compute. By reducing the power required for cooling, operators can allocate more electricity to IT systems and convert more of their power into compute without drawing additional power from the grid.
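The reallocation can be sketched as a capacity calculation. This minimal model assumes cooling and other overhead scale with IT load (expressed as kW of overhead per kW of IT), which also captures the fact that added IT brings added heat. The 10 MW envelope and the overhead ratios are hypothetical:

```python
def max_it_kw(envelope_kw: float, cooling_per_it_kw: float, other_per_it_kw: float) -> float:
    """Largest IT load that fits inside a fixed grid envelope.

    Overheads are modeled as kW of cooling (and other overhead) per kW of IT,
    so the envelope must cover IT * (1 + cooling + other).
    """
    return envelope_kw / (1.0 + cooling_per_it_kw + other_per_it_kw)


envelope = 10_000.0  # hypothetical 10 MW grid connection
other = 0.10         # hypothetical: power conversion, lighting, etc.

# Hypothetical cooling overhead before and after an efficiency upgrade.
baseline = max_it_kw(envelope, cooling_per_it_kw=0.50, other_per_it_kw=other)
optimized = max_it_kw(envelope, cooling_per_it_kw=0.15, other_per_it_kw=other)

print(round(baseline), round(optimized))  # 6250 8000
print(f"extra IT capacity: {optimized - baseline:,.0f} kW")
```

Under these assumed ratios, cutting cooling from 0.50 to 0.15 kW per IT kW raises the IT load the same grid connection can support from 6,250 kW to 8,000 kW, about 28% more, with no additional grid draw. Modeling overhead as a ratio rather than a fixed number avoids overstating the gain, because the cooling system still has to remove the heat produced by the extra IT.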

The performance impact of cooling optimization

Cooling improvements deliver four measurable performance advantages:

- Speed to capacity: Cooling optimization is the fastest way to tap “old-new” power, meaning capacity the site already has but currently spends on cooling. It enables rapid increases in usable capacity without waiting for new power or infrastructure expansion.

- Improved Power Usage Effectiveness (PUE): Reducing cooling energy lowers non-IT power consumption, directly improving facility-level energy efficiency metrics.

- Improved Power Compute Effectiveness (PCE): Shifting power from cooling to IT increases the proportion of total allocated power that is converted into activated power, meaning power that actually runs compute.

- Improved Return on Invested Power (ROIP): Redirecting power away from infrastructure overhead toward revenue-generating compute increases the financial return per kilowatt invested.
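The post does not give formulas for PCE or ROIP, so rather than guess at them, this sketch computes the standard PUE (total facility energy divided by IT energy) and its reciprocal, DCiE, which expresses the share of total energy that reaches IT and is the closest standard analogue to the PCE idea above. The meter readings are hypothetical:

```python
def pue(energy_kwh: dict[str, float]) -> float:
    """Power Usage Effectiveness: total facility energy / IT energy."""
    return sum(energy_kwh.values()) / energy_kwh["it"]


def dcie(energy_kwh: dict[str, float]) -> float:
    """Data Center infrastructure Efficiency: IT energy / total facility energy (= 1 / PUE)."""
    return energy_kwh["it"] / sum(energy_kwh.values())


# Hypothetical monthly meter readings (kWh) before and after a cooling retrofit.
before = {"it": 4_000_000, "cooling": 2_000_000, "other": 400_000}
after = {"it": 4_000_000, "cooling": 800_000, "other": 400_000}

for label, readings in (("before", before), ("after", after)):
    print(f"{label}: PUE={pue(readings):.2f}, IT share={dcie(readings):.1%}")
# before: PUE=1.60, IT share=62.5%
# after: PUE=1.30, IT share=76.9%
```

Holding IT energy constant isolates the cooling effect: in this made-up example, cutting cooling energy by 60% moves PUE from 1.60 to 1.30 and raises the share of energy reaching IT from 62.5% to about 77%.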

How can cooling be optimized to free up more power?

The following approaches help reduce cooling energy demand, making more power available to IT equipment and compute:

- Adopt high-efficiency liquid cooling: Spray liquid cooling and other liquid-based cooling solutions remove heat more efficiently than air, reducing fan energy, airflow requirements, and overall cooling power consumption.

- Reduce or eliminate chiller and compressor dependence: Chiller-free or reduced-chiller architectures significantly lower cooling energy consumption, especially in high-density and AI-driven environments.

- Recover and reuse waste heat: Capturing and reusing waste heat improves overall energy utilization and reduces the net cooling load on the facility.

- Integrate controls and intelligent monitoring: Incorporating AI-driven control systems and real-time monitoring optimizes cooling performance dynamically, ensuring energy is used only where and when it’s needed.
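The efficiency argument for liquid cooling comes down to heat capacity: the heat a coolant carries equals its density times its specific heat times its volumetric flow times its temperature rise. Solving that relation for flow shows how much fluid must move to remove a given heat load. This sketch compares air with water, used here as a representative liquid; the dielectric fluids used in spray and immersion systems have lower volumetric heat capacity than water but still far more than air. The rack load, temperature rise, and rounded property values are assumptions:

```python
# Approximate property values near room temperature (assumed, rounded).
AIR_DENSITY = 1.2       # kg/m^3
AIR_CP = 1005.0         # J/(kg*K)
WATER_DENSITY = 997.0   # kg/m^3
WATER_CP = 4186.0       # J/(kg*K)


def volumetric_flow_m3s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Flow rate (m^3/s) needed to carry `heat_w` watts with a `delta_t_k` temperature rise.

    Rearranged from Q = density * cp * flow * delta_T.
    """
    return heat_w / (density * cp * delta_t_k)


rack_heat_w = 100_000.0  # hypothetical 100 kW AI rack
delta_t = 10.0           # hypothetical 10 K coolant temperature rise

air = volumetric_flow_m3s(rack_heat_w, AIR_DENSITY, AIR_CP, delta_t)
water = volumetric_flow_m3s(rack_heat_w, WATER_DENSITY, WATER_CP, delta_t)

print(f"air:   {air:.2f} m^3/s")           # air:   8.29 m^3/s
print(f"water: {water * 1000:.2f} L/s")    # water: 2.40 L/s
print(f"ratio: {air / water:,.0f}x")       # ratio: 3,461x
```

For this hypothetical 100 kW rack, air needs roughly 8.3 m³/s while water needs about 2.4 L/s, a ratio of roughly 3,500 to 1. Moving that much less fluid is why liquid systems can cut fan energy and airflow requirements, although actual pump and fan power also depend on pressure drop, which this sketch ignores.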

PowerOne™: Cooling optimization in action

PowerOne is AIRSYS’ flagship portfolio of intelligent, high-efficiency cooling systems that enable ultra-high-density data centers to convert cooling power into greater computing capacity. To achieve this, PowerOne employs multiple cooling optimization strategies, including compressor-free operation, advanced liquid and hybrid cooling architectures, and integrated intelligent control systems. Together, these capabilities help operators improve both efficiency and effectiveness, increasing usable compute capacity and lowering operational costs within the same facility footprint.

Doing more with the power you have

The most competitive data centers are those that best manage the power they already have and allocate it strategically to maximize compute output. The good news is that even as power availability tightens, much of the capacity needed to support growth is already within reach, embedded in the data center itself and unlocked through smarter, more efficient technologies.

AIRSYS helps data center operators turn these challenges into opportunities with advanced cooling solutions. If you’re looking to maximize compute output within your current power envelope, contact us to explore how cooling optimization can help you move forward, faster and more efficiently.